Detecting Movement with Pairwise Frame Subtraction

Do I want to read this? This post is about extracting the moving portion of the current video frame by combining its difference images with the frames on either side of it. It’s super simple, and can be surprisingly powerful.

Background & Foreground

Far more often than not, the foreground of an image contains all the interesting information, while the background is, well… background. Think of cars moving on a road, tennis balls flying over a court, or animals passing a camera trap. Cars, balls and tigers are more interesting than tarmac, grass and trees.

To detect objects or regions in the foreground of an image, we can just compare the image in question to one of the empty scene (the background image) and work out what’s changed. This is called background subtraction.

So, explicitly: two images, one of the empty background and one containing something of interest on that background, are subtracted from one another on a pixel-by-pixel basis. That is, the absolute difference between each pair of corresponding pixel values is calculated. Any pixel whose value has changed by more than a threshold amount is marked as foreground. The images used are grayscale, so each pixel is represented by a single intensity value.
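To make the per-pixel arithmetic concrete, here is a toy version in plain NumPy (an illustrative choice; the post itself uses OpenCV). The 3x3 arrays are made up, and the threshold of 40 matches the one used in the OpenCV example below.

```python
import numpy as np

# Two tiny made-up 3x3 grayscale "images" (uint8, as an 8-bit grayscale read would give).
background = np.array([[10, 10, 10],
                       [10, 10, 10],
                       [10, 10, 10]], dtype=np.uint8)
scene = np.array([[10, 12, 10],
                  [10, 200, 90],
                  [10, 10, 0]], dtype=np.uint8)

# Widen to a signed type before subtracting: uint8 arithmetic wraps around,
# so 10 - 200 would come out as 66 instead of -190.
diff = np.abs(scene.astype(np.int16) - background.astype(np.int16)).astype(np.uint8)

# Pixels that changed by MORE than the threshold become foreground (255), the rest 0.
threshold_value = 40
mask = np.where(diff > threshold_value, 255, 0).astype(np.uint8)
```

Only the two centre-row pixels (changes of 190 and 80) survive the threshold; the small changes of 2 and 10 are treated as background. The widening to `int16` matters here: `cv2.absdiff` computes the absolute difference correctly for `uint8` inputs, but a plain NumPy subtraction of two `uint8` arrays silently wraps.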

Here’s a simple example written in Python using OpenCV:

```python
import cv2

# Read two images directly as grayscale
img1 = cv2.imread('path/to/image1.jpg', cv2.IMREAD_GRAYSCALE)
img2 = cv2.imread('path/to/image2.jpg', cv2.IMREAD_GRAYSCALE)

# Take the pixel-by-pixel absolute difference of the two images
diff = cv2.absdiff(img1, img2)

# Set every pixel that changed by more than 40 to 255, and all others to zero.
threshold_value = 40
set_to_value = 255

# cv2.threshold returns a (threshold_used, image) tuple; keep only the image.
_, result = cv2.threshold(diff, threshold_value, set_to_value, cv2.THRESH_BINARY)
```

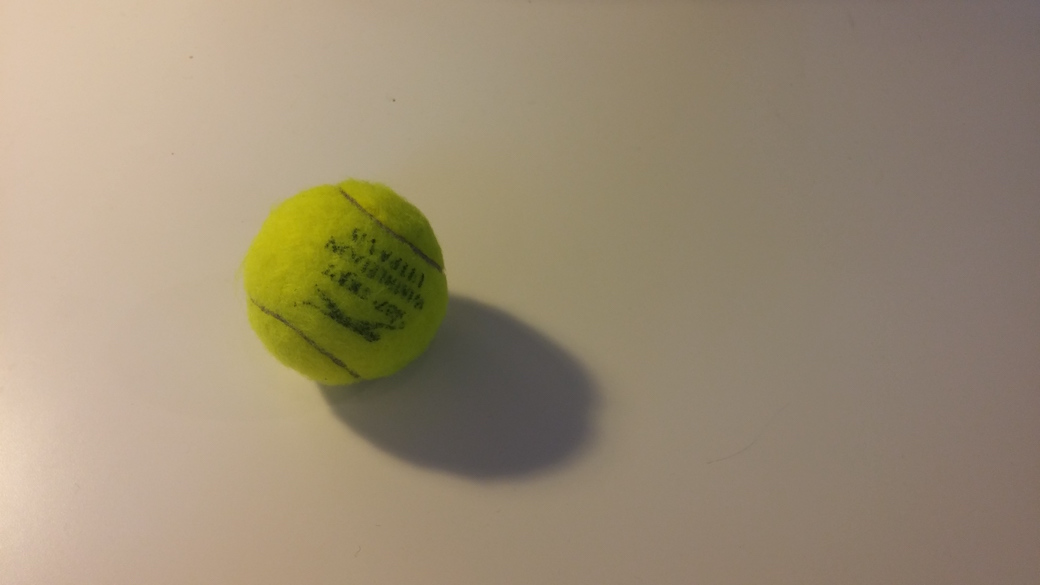

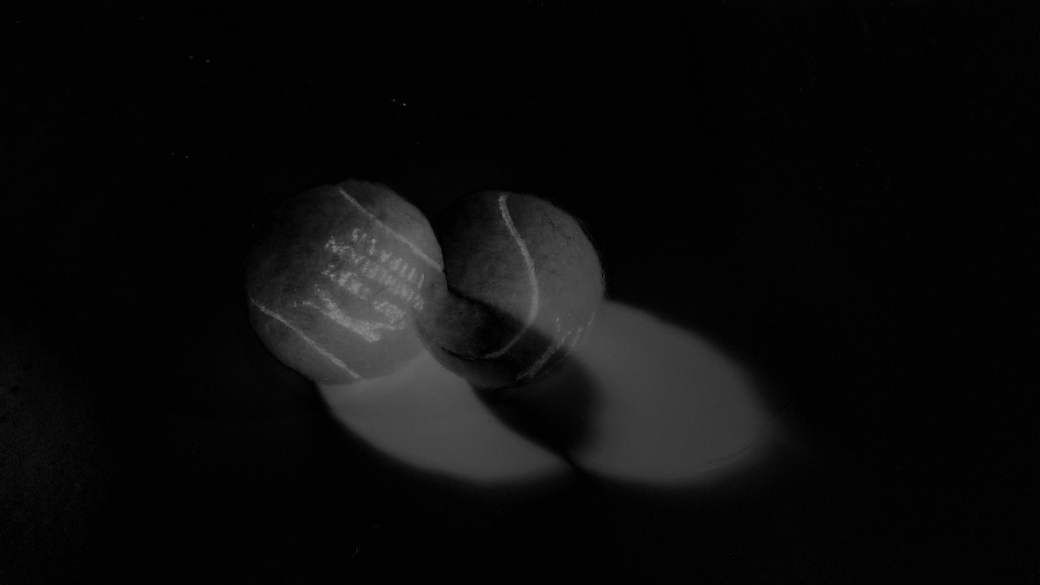

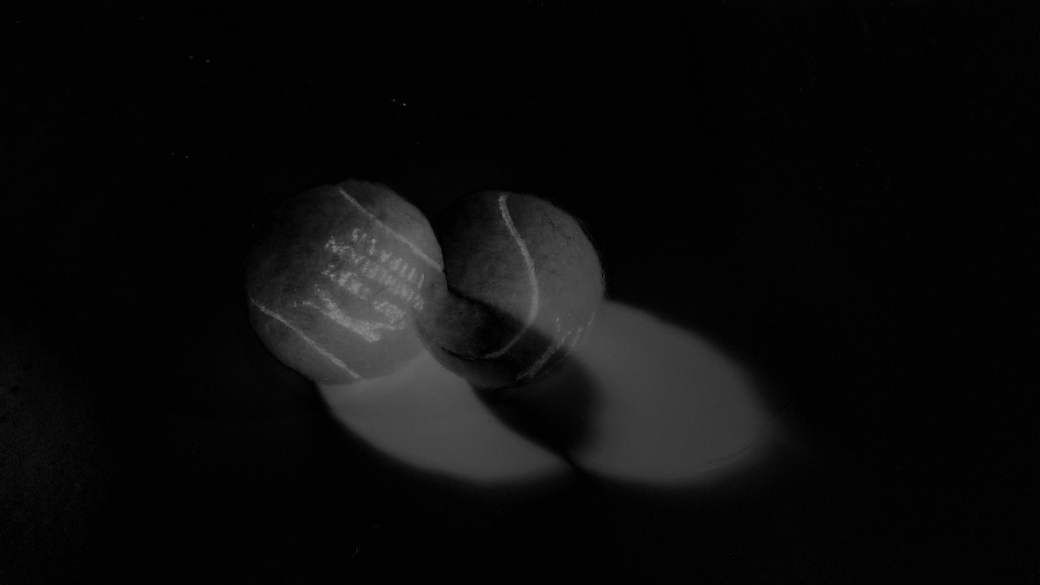

And here’s the effect of the above process on two images:

Adjacent frames

In the above example, we compared our target image with one we had purposefully taken of the empty scene. This works really nicely, but it’s a little unrealistic if we want to detect moving objects in real video sequences.

In many cases, we don’t have access to a clean image of the completely empty scene to use as our background image. Even more commonly, the ‘background’ may not actually be empty or still at all. This is often the case with real video sequences, especially those recorded outdoors or by hand.

A common technique is to compare the current frame with the immediately previous one. By using the previous frame as the reference, our background ‘model’ is constantly updated to represent the scene as it was last observed. Any significant difference in pixel intensity is then assumed to be caused by objects moving between frames.
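A minimal sketch of this previous-frame scheme, written as a NumPy generator. The function name `frame_difference_masks` and the default threshold of 40 are illustrative assumptions, not part of any library; the frames are assumed to be same-sized 2-D `uint8` grayscale arrays.

```python
import numpy as np

def frame_difference_masks(frames, threshold_value=40):
    """Yield one binary mask per frame (from the second frame on),
    using the immediately previous frame as the background.

    Hypothetical helper: `frames` is any iterable of same-sized
    2-D uint8 grayscale arrays.
    """
    previous = None
    for frame in frames:
        # Widen to int16 so the subtraction cannot wrap around.
        current = frame.astype(np.int16)
        if previous is not None:
            diff = np.abs(current - previous)
            yield np.where(diff > threshold_value, 255, 0).astype(np.uint8)
        # The "background model" is simply whatever was seen last.
        previous = current
```

With real video, the frames could come from repeated `cv2.VideoCapture.read()` calls, each converted with `cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)`. Because the reference is replaced on every step, slow changes such as gradual lighting drift never accumulate into a stale background.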

Naive Motion Detection

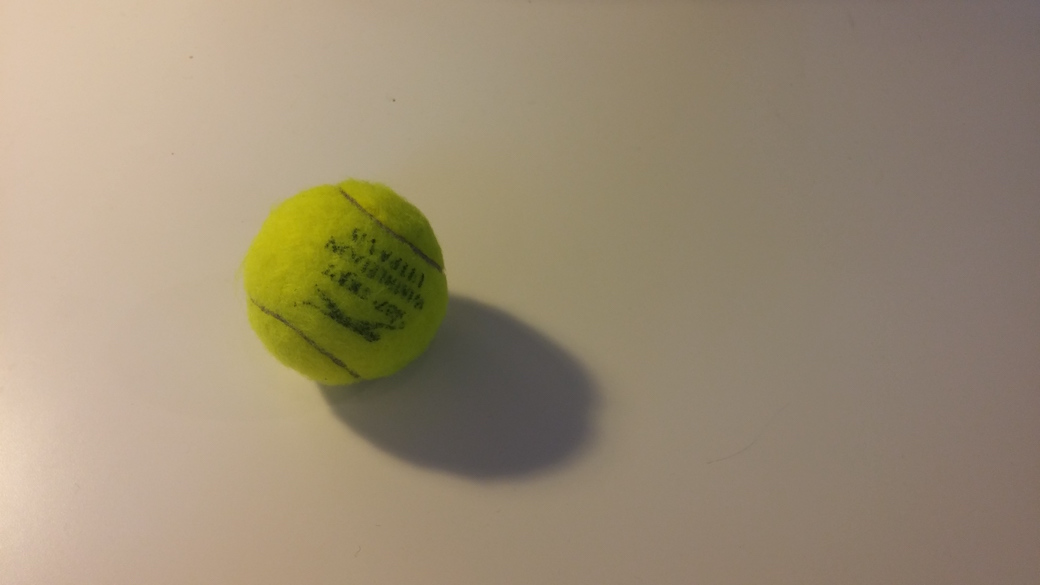

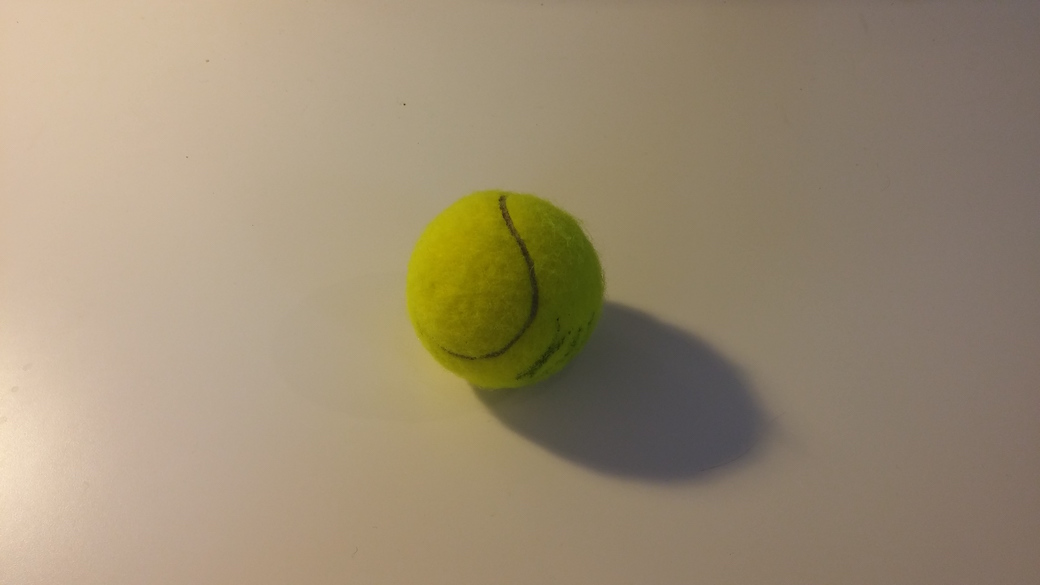

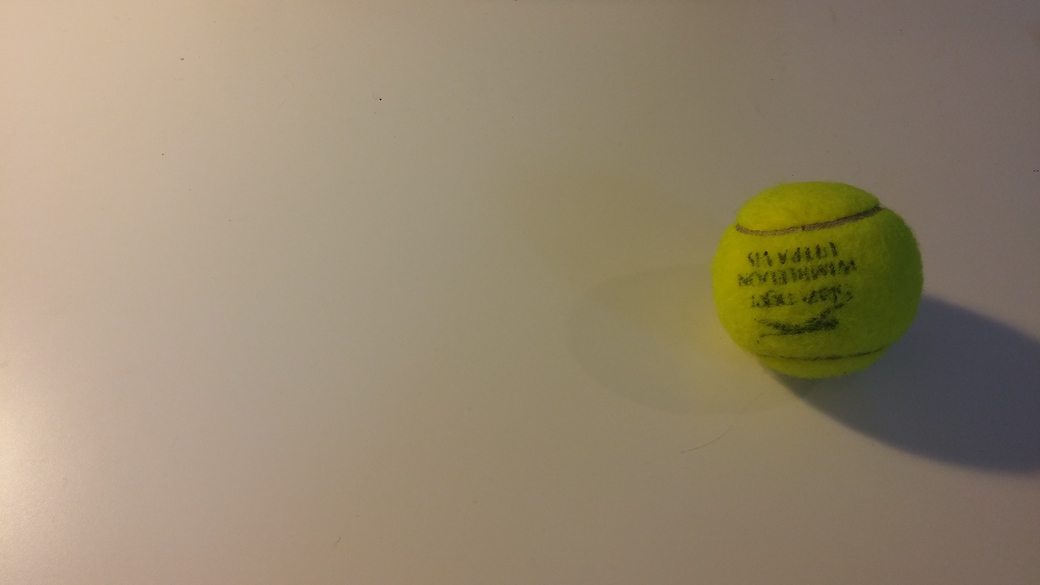

So we’ve got two consecutive video frames, and using background subtraction we can easily highlight the pixels that have changed intensity between them. Movement of foreground objects will cause pixels to change colour, so our background subtraction will highlight movement. Makes sense. Let’s try it with a tennis ball:

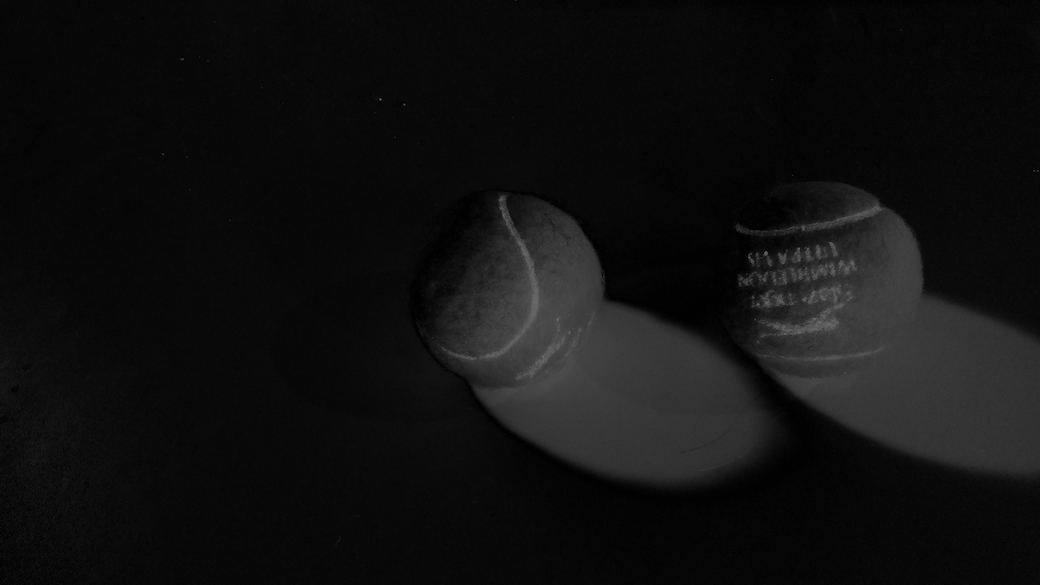

Now that our foreground is moving, notice how adjacent-frame background subtraction actually highlights two regions of ‘movement’ - one region where the object has moved to, and one where it has moved from. The pixels change colour/intensity in both areas, from background to foreground in the first and from foreground to background in the second - but background subtraction doesn’t care. If the pixel changes value, we have to assume it’s foreground.
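The ghost effect is easy to reproduce on synthetic data. This sketch assumes a hypothetical `make_frame` helper that draws a bright square ‘ball’ on a flat grey background; the sizes, intensities and threshold of 40 are made up for illustration.

```python
import numpy as np

def make_frame(ball_col, height=10, width=20, size=4):
    """Hypothetical synthetic frame: a bright square 'ball' on a flat grey background."""
    frame = np.full((height, width), 50, dtype=np.uint8)
    frame[3:3 + size, ball_col:ball_col + size] = 200
    return frame

frame0 = make_frame(ball_col=2)    # ball covers columns 2-5
frame1 = make_frame(ball_col=10)   # ball covers columns 10-13

diff = np.abs(frame1.astype(np.int16) - frame0.astype(np.int16))
mask = np.where(diff > 40, 255, 0).astype(np.uint8)

# Columns containing foreground: the ball's new position AND its old one (the ghost).
changed_columns = np.flatnonzero(mask.any(axis=0))
```

`changed_columns` covers both 2-5 and 10-13: the two-frame difference cannot tell "ball arrived here" from "ball left here".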

In some cases, this kind of movement detection might be useful. But let’s say we want to know quite accurately where the tennis ball is in any given frame - we really need to get rid of that ghost image from the previous frame.

Pairwise Frame Subtraction, or Three-Frame Differencing

We can solve this problem by using pairwise frame subtraction.

I’m not totally sure if I’m using the right terminology, as I’ve also heard this technique referred to as three-frame differencing and temporal frame subtraction. But whatever you want to call it, the idea is to use three consecutive frames to isolate movement in the middle frame.

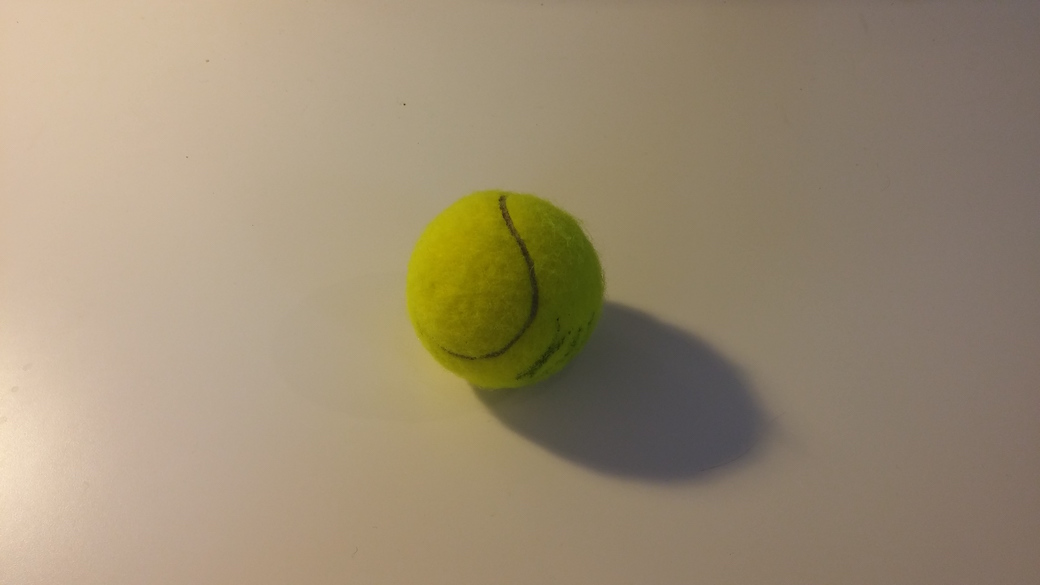

Take three consecutive video frames, f0, f1 and f2.

First we find the difference image of f0 and f1, which marks any pixels that have changed intensity significantly between the first two frames. Independently, we find the difference image of f1 and f2, marking any pixels that have changed significantly between the second pair of frames.

Finally, we look at the overlap between the two difference images:

```python
overlap = cv2.bitwise_and(difference_image_1, difference_image_2)
```

So there you have it: by selecting only the pixels that changed both on the transition from frame 0 to frame 1 and on the transition from frame 1 to frame 2, we can isolate whatever was moving in the central frame.
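Putting the steps together, here is a minimal NumPy sketch of three-frame differencing. `np.bitwise_and` stands in for `cv2.bitwise_and` and behaves the same way on 0/255 masks; the helper names, the synthetic `make_frame` scene and the threshold of 40 are illustrative assumptions.

```python
import numpy as np

def binary_difference(a, b, threshold_value=40):
    """Thresholded absolute difference of two uint8 grayscale frames (0 or 255)."""
    # Widen to int16 so the subtraction can go negative instead of wrapping.
    diff = np.abs(a.astype(np.int16) - b.astype(np.int16))
    return np.where(diff > threshold_value, 255, 0).astype(np.uint8)

def three_frame_difference(f0, f1, f2, threshold_value=40):
    """Foreground mask for the middle frame f1, built from f0, f1 and f2."""
    difference_image_1 = binary_difference(f0, f1, threshold_value)
    difference_image_2 = binary_difference(f1, f2, threshold_value)
    # Keep only pixels that changed on BOTH transitions.
    return np.bitwise_and(difference_image_1, difference_image_2)

def make_frame(ball_col, height=10, width=30, size=4):
    """Hypothetical synthetic frame: a bright square 'ball' on a flat grey background."""
    frame = np.full((height, width), 50, dtype=np.uint8)
    frame[3:3 + size, ball_col:ball_col + size] = 200
    return frame

# The ball moves 8 pixels per frame, so its positions never overlap.
f0, f1, f2 = make_frame(2), make_frame(10), make_frame(18)
mask = three_frame_difference(f0, f1, f2)
moving_columns = np.flatnonzero(mask.any(axis=0))
```

Each pairwise difference contains two blobs, but only the ball’s position in f1 (columns 10-13) appears in both, so the ghosts from f0 and f2 drop out of the final mask.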

Notes

- The images above are a decent illustration of why lighting can be so problematic. We’re detecting as much shadow movement as we are object movement. I probably should have used images of a coin on some paper or something.

- Look carefully at the difference image between frames 0 and 1. See how the ball hasn’t moved all that far, and actually partly overlaps its previous position. This causes a hole in our foreground detection, where the pixels haven’t changed value significantly between frames (they’re tennis ball coloured in both frames).
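The hole from the second note can be reproduced with a synthetic scene. This sketch idealises the ball as a uniformly coloured square (a real tennis ball has some texture and shading) and uses a hypothetical `make_frame` helper with made-up sizes and a threshold of 40.

```python
import numpy as np

def make_frame(ball_col, height=10, width=20, size=4):
    """Hypothetical synthetic frame: a uniformly bright square 'ball' on a flat grey background."""
    frame = np.full((height, width), 50, dtype=np.uint8)
    frame[3:3 + size, ball_col:ball_col + size] = 200
    return frame

# The ball moves only 2 pixels, less than its 4-pixel width, so the two positions overlap.
frame0 = make_frame(ball_col=2)   # ball covers columns 2-5
frame1 = make_frame(ball_col=4)   # ball covers columns 4-7

diff = np.abs(frame1.astype(np.int16) - frame0.astype(np.int16))
mask = np.where(diff > 40, 255, 0).astype(np.uint8)

# Columns 4-5 are ball-coloured in both frames, so they never register as changed.
detected_columns = np.flatnonzero(mask.any(axis=0))
```

Only the leading and trailing edges (columns 2-3 and 6-7) are detected; the overlapping middle of the ball is missing from the mask.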